scatteredPapers

A collection of scattered paper notes

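Reading papers has always mattered. Where the papers come from, how closely to read them, what to focus on, and, when necessary, quickly skimming the code are all important.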

These notes mainly record my takeaways from skimming papers, to serve as a source of inspiration. For skimming code, Cursor is genuinely convenient.

ACE: Off-Policy Actor-Critic with Causality-Aware Entropy Regularization: uses causal analysis to obtain a weight measuring how strongly the reward depends on each action dimension.

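The experiments in the paper are thorough. I took a quick look at the code and excerpt parts of it here. At a high level, ACE is a modification of SAC, for example in the part where the policy samples actions: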

GaussianCausalPolicy

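```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.distributions import Normal

# Constants not shown in the excerpt; these are the values commonly used in
# PyTorch SAC implementations (assumed, not verified against the ACE repo).
LOG_SIG_MAX = 2
LOG_SIG_MIN = -20
epsilon = 1e-6


class GaussianCausalPolicy(nn.Module):
    def __init__(self, num_inputs, num_actions, hidden_dim, action_space=None):
        # ... (omitted in the excerpt: defines linear1-3, mean_linear, log_std_linear,
        # action_scale, action_bias and causal_default_weight)
        ...

    def forward(self, state):
        x = F.elu(self.linear1(state))
        x = F.relu(self.linear2(x))
        x = F.relu(self.linear3(x))
        mean = self.mean_linear(x)
        log_std = self.log_std_linear(x)
        log_std = torch.clamp(log_std, min=LOG_SIG_MIN, max=LOG_SIG_MAX)
        return mean, log_std

    def sample(self, state, causal_weight=None):
        mean, log_std = self.forward(state)
        std = log_std.exp()
        normal = Normal(mean, std)
        x_t = normal.rsample()  # reparameterization trick (mean + std * N(0,1))
        y_t = torch.tanh(x_t)
        action = y_t * self.action_scale + self.action_bias
        log_prob = normal.log_prob(x_t)  # per-dimension log-prob, [batch, action_dim]
        # Enforcing action bound (tanh change-of-variables correction)
        log_prob -= torch.log(self.action_scale * (1 - y_t.pow(2)) + epsilon)
        #* compute causal weighted entropy
        if causal_weight is None:
            causal_weight = self.causal_default_weight
        # causal_weight: NumPy array of shape [action_dim]
        causal_weight = torch.from_numpy(causal_weight).to(log_prob.device).clone().detach()
        # Scale each action dimension's log-prob, then sum into the weighted log pi(a|s)
        log_prob = log_prob * causal_weight.unsqueeze(0)
        log_prob = log_prob.sum(1, keepdim=True)
        mean = torch.tanh(mean) * self.action_scale + self.action_bias
        return action, log_prob, mean
```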

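Here `causal_weight` comes from the causal analysis and has shape [action_dim]. The agent is updated exactly as in SAC. The only difference is the extra `causal_weight` passed to `sample`, which scales the log-probability (and therefore the entropy term) of each action dimension. It encodes how much emphasis we place on each action dimension.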
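The change relative to SAC can be isolated in a small NumPy sketch. It assumes batched Gaussian parameters of shape [batch, action_dim] and a weight vector of shape [action_dim]. The function names `tanh_gaussian_log_prob` and `causal_weighted_log_prob` are hypothetical. This is not code from the ACE repo. It shows that the per-dimension log-probs are scaled before summing, so with all weights equal to 1 it reduces to the usual SAC log-prob.

```python
import numpy as np


def tanh_gaussian_log_prob(x_t, mean, log_std, action_scale=1.0, eps=1e-6):
    """Per-dimension log-prob of a tanh-squashed Gaussian, shape [batch, action_dim]."""
    std = np.exp(log_std)
    # log N(x_t | mean, std), evaluated elementwise
    gauss = -0.5 * ((x_t - mean) / std) ** 2 - log_std - 0.5 * np.log(2 * np.pi)
    y_t = np.tanh(x_t)
    # tanh change-of-variables correction, as in sample() above
    return gauss - np.log(action_scale * (1 - y_t ** 2) + eps)


def causal_weighted_log_prob(per_dim_log_prob, causal_weight):
    """Scale each action dim's log-prob by its causal weight, then sum over dims."""
    return (per_dim_log_prob * causal_weight[None, :]).sum(axis=1, keepdims=True)


# Usage on a made-up batch of 4 states with 3 action dimensions
rng = np.random.default_rng(0)
mean = np.zeros((4, 3))
log_std = np.zeros((4, 3))
x_t = mean + np.exp(log_std) * rng.normal(size=mean.shape)  # reparameterized sample
lp = tanh_gaussian_log_prob(x_t, mean, log_std)
sac = causal_weighted_log_prob(lp, np.ones(3))                # all ones: vanilla SAC
ace = causal_weighted_log_prob(lp, np.array([2.0, 0.5, 0.5]))  # emphasize dim 0
```

In the SAC losses, the weighted `log_prob` simply takes the place of the usual log pi(a|s). A dimension with a larger weight therefore contributes more to the entropy bonus and is pushed to explore more.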

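As for how `causal_weight` is obtained, it is essentially a call into an off-the-shelf library (`lingam`). The basic idea is to compute a weight that measures how strongly the reward depends on each action dimension, using data sampled from the replay buffer.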
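The "weight between reward and each action dimension" idea can be made concrete without installing `lingam`. The stand-in below is explicitly not causal discovery. It runs ordinary least squares of the reward on [states, actions] and keeps the action coefficients. The function name `reward_action_coefficients` is hypothetical and the synthetic data is made up for illustration. DirectLiNGAM instead estimates a causal ordering and a full adjacency matrix over all variables, so its coefficients need not coincide with these in general.

```python
import numpy as np


def reward_action_coefficients(states, actions, rewards):
    """Regress reward on [states, actions]; return one coefficient per action dim.

    A plain least-squares stand-in, NOT the DirectLiNGAM causal discovery ACE uses.
    """
    n = states.shape[0]
    X = np.hstack([states, actions, np.ones((n, 1))])  # last column: intercept
    coef, *_ = np.linalg.lstsq(X, rewards.reshape(n), rcond=None)
    s_dim, a_dim = states.shape[1], actions.shape[1]
    return coef[s_dim:s_dim + a_dim]


# Synthetic "replay buffer": the reward depends on a0 (positively) and a2 (negatively)
rng = np.random.default_rng(0)
S = rng.normal(size=(2000, 4))
A = rng.normal(size=(2000, 3))
R = 0.5 * S[:, 0] + 2.0 * A[:, 0] - 1.0 * A[:, 2] + 0.01 * rng.normal(size=2000)
coef = reward_action_coefficients(S, A, R)
```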

Causal analysis code

```python
import time

import lingam
import numpy as np
import pandas as pd
import torch
import torch.nn.functional as F


def get_sa2r_weight(env, memory, agent, sample_size=5000, causal_method='DirectLiNGAM'):
    # Sample transitions from the replay buffer
    states, actions, rewards, next_states, dones = memory.sample(sample_size)
    rewards = np.squeeze(rewards[:sample_size])
    rewards = np.reshape(rewards, (sample_size, 1))
    # Columns are ordered [states | actions | reward]
    X_ori = np.hstack((states[:sample_size, :], actions[:sample_size, :], rewards))
    X = pd.DataFrame(X_ori, columns=list(range(np.shape(X_ori)[1])))

    if causal_method == 'DirectLiNGAM':
        start_time = time.time()
        model = lingam.DirectLiNGAM()
        model.fit(X)
        end_time = time.time()
        model._running_time = end_time - start_time
    else:
        raise ValueError(f"unsupported causal_method: {causal_method}")

    # Last row = reward; the action columns hold each action dim's direct effect on it
    weight_r = model.adjacency_matrix_[-1, np.shape(states)[1]:(np.shape(states)[1] + np.shape(actions)[1])]

    # softmax weight_r
    weight = F.softmax(torch.Tensor(weight_r), 0)
    weight = weight.numpy()
    #* multiply by action size
    weight = weight * weight.shape[0]
    return weight, model._running_time
```
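The last two steps of `get_sa2r_weight` are the row slice of the adjacency matrix and the normalization. They can be checked on a hand-built matrix. This is a minimal NumPy sketch. The helper names and the toy matrix `B` are hypothetical, and a NumPy softmax replaces `F.softmax`. It relies on LiNGAM's convention x = Bx + e, where row i of B holds the direct effects of each x_j on x_i.

```python
import numpy as np


def action_to_reward_row(B, state_dim, action_dim):
    # Row i of B = direct effects of every variable on x_i. With columns ordered
    # [states | actions | reward], the last row restricted to the action columns
    # gives the action -> reward effects.
    return B[-1, state_dim:state_dim + action_dim]


def normalize_causal_weight(weight_r):
    # Same normalization as get_sa2r_weight: softmax, then multiply by action_dim.
    w = np.asarray(weight_r, dtype=np.float64)
    e = np.exp(w - w.max())            # numerically stable softmax
    return e / e.sum() * w.shape[0]    # weights sum to action_dim (mean 1)


# Hypothetical toy adjacency matrix: 2 state dims, 3 action dims, 1 reward
B = np.zeros((6, 6))
B[-1, 2:5] = [2.0, 0.0, -1.0]          # a0 -> r strong, a1 no edge, a2 -> r negative
weight = normalize_causal_weight(action_to_reward_row(B, state_dim=2, action_dim=3))
uniform = normalize_causal_weight(np.zeros(3))  # no action -> reward edges at all
```

Two consequences follow from this normalization. First, when no action dimension has an edge to the reward, every weight is 1 and the update reduces to standard SAC. Second, softmax is monotone in the raw coefficient, so a dimension with a negative effect on the reward gets a smaller weight than one with no edge. The sign of the LiNGAM coefficient therefore matters, not just its magnitude.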

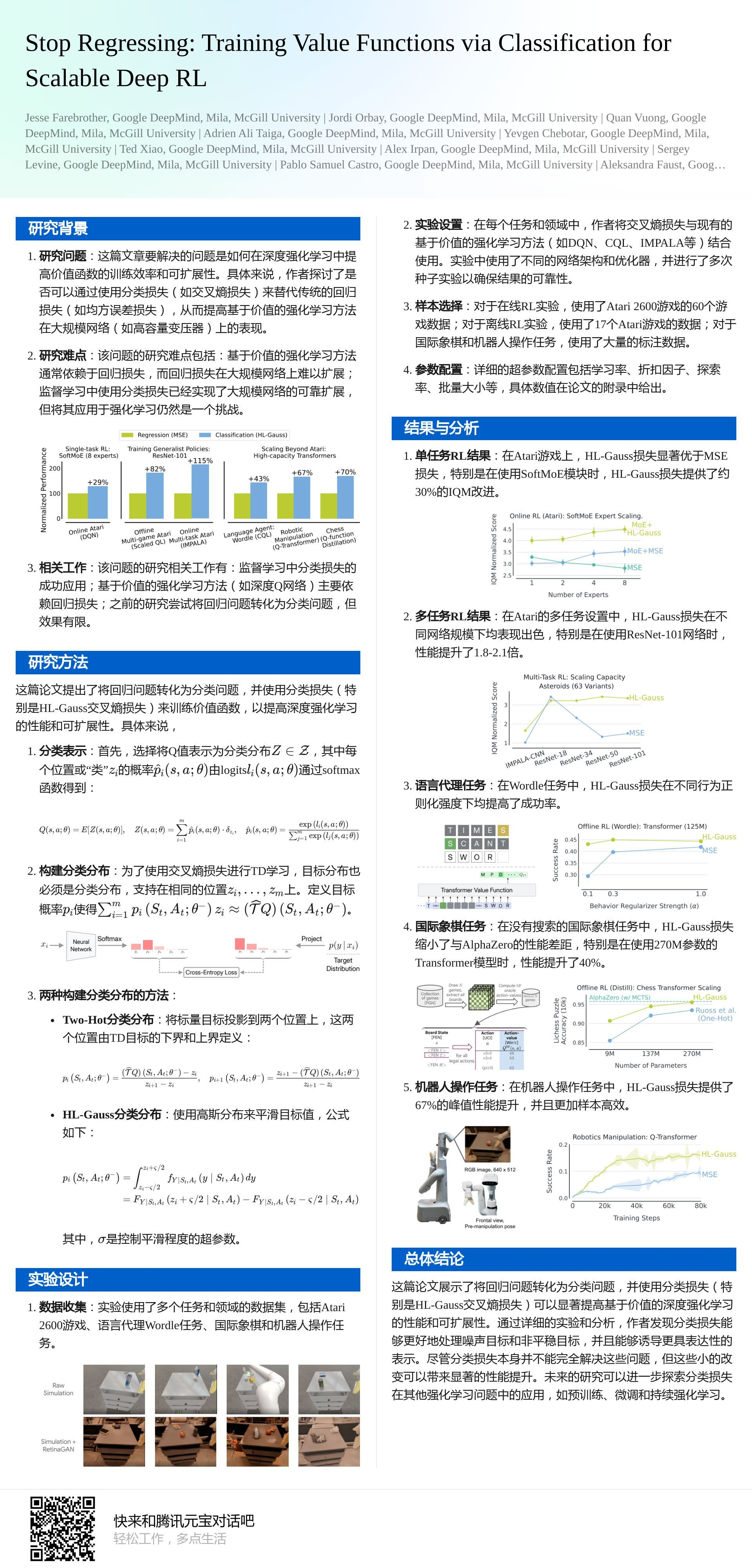

Stop Regressing: Training Value Functions via Classification for Scalable Deep RL

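Trains value functions with a classification loss instead of regression to improve the scalability of deep reinforcement learning (RL). I reserve judgment on this: the authors claim that the classification loss helps cope with noise in the rewards and non-stationarity in the dynamics.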
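The core idea can be sketched in a few lines of NumPy. The value head outputs logits over fixed bins, the scalar target is turned into a distribution over those bins, and cross-entropy replaces MSE. This sketch assumes evenly spaced bin centers and uses the simple two-hot encoding. The paper also discusses other encodings, such as a Gaussian-smoothed histogram (HL-Gauss). The function names are hypothetical and this is not the authors' implementation.

```python
import numpy as np


def two_hot(y, bins):
    """Encode scalar targets y (shape [B]) as two-hot distributions over sorted bins."""
    y = np.clip(y, bins[0], bins[-1])
    idx = np.clip(np.searchsorted(bins, y, side="right") - 1, 0, len(bins) - 2)
    lo, hi = bins[idx], bins[idx + 1]
    w_hi = (y - lo) / (hi - lo)            # linear interpolation between neighbors
    probs = np.zeros((len(y), len(bins)))
    rows = np.arange(len(y))
    probs[rows, idx] = 1.0 - w_hi
    probs[rows, idx + 1] = w_hi
    return probs


def log_softmax(logits):
    z = logits - logits.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))


def classification_value_loss(logits, y, bins):
    """Cross-entropy between predicted bin logits and two-hot targets (replaces MSE)."""
    return -(two_hot(y, bins) * log_softmax(logits)).sum(axis=1).mean()


def expected_value(logits, bins):
    """Recover a scalar value as the expectation over bin centers."""
    return np.exp(log_softmax(logits)) @ bins


bins = np.linspace(-1.0, 1.0, 5)
y = np.array([0.25, -1.0, 1.0, 0.6])
target = two_hot(y, bins)
good_logits = np.log(target + 1e-9)   # logits that match the targets
flat_logits = np.zeros_like(target)   # uninformative uniform prediction
```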