一些比较新的,未必有用的论文,仅仅只是mark一下

(1)DIPO: Policy Representation via Diffusion Probability Model for Reinforcement Learning

之前我们看的很多Diffusion Model的论文都是Offline RL的,这篇论文比较有意思,是online model-free的。

model-free online RL problems with the diffusion model

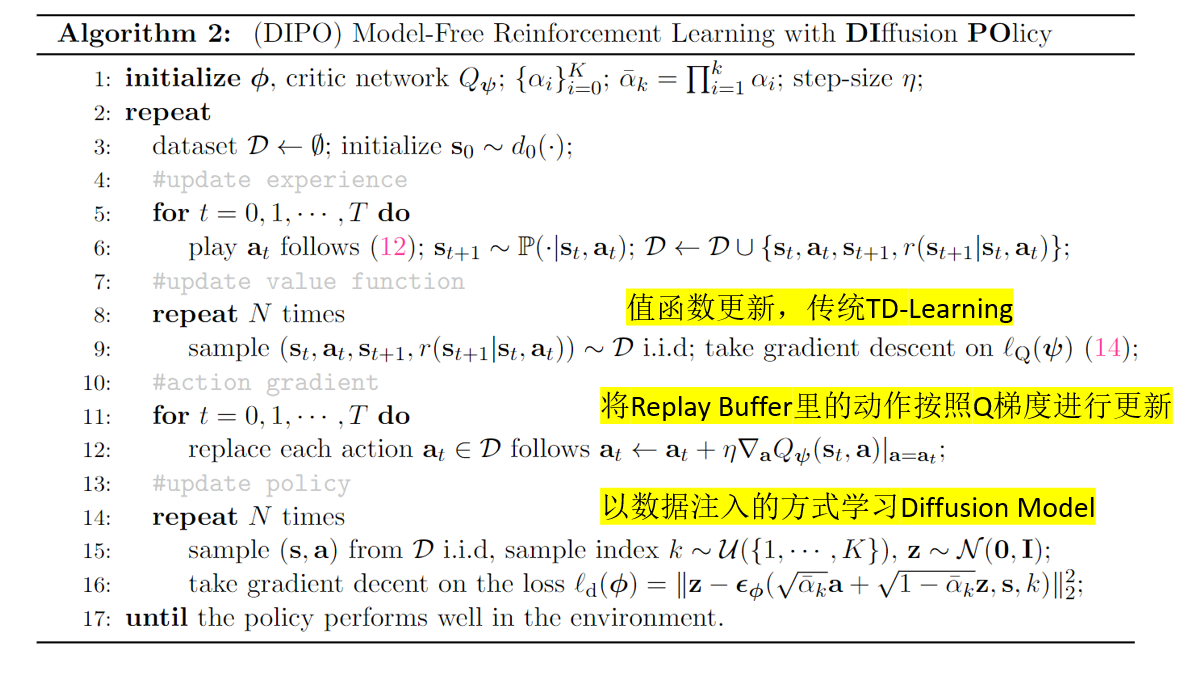

直接看伪代码和代码,随后我们再做点评:

(1)Q函数的学习按照传统的TD-learning,不再赘述

(2)action按照Q梯度上升的方向进行更新(这个没什么难理解的,跟DDPG或者说SAC的策略更新是一样的,只是这边直接用的是离散的动作集合,但是原则上是一样的)

(3)Diffusion按照(s, a)进行监督式学习

整个过程最重要的一点是Diffusion Model的学习其实还是一个监督式的学习方式,毕竟Diffusion更多是一个生成式的模型,其内部的损失无法通过外部的梯度注入,只能是以数据的形式注入。 关键代码片段

def action_gradient(self, batch_size, log_writer):

states, best_actions, idxs = self.diffusion_memory.sample(batch_size)

actions_optim = torch.optim.Adam([best_actions], lr=self.action_lr, eps=1e-5)

for i in range(self.action_gradient_steps):

best_actions.requires_grad_(True)

q1, q2 = self.critic(states, best_actions)

loss = -torch.min(q1, q2)

actions_optim.zero_grad()

loss.backward(torch.ones_like(loss))

if self.action_grad_norm > 0:

actions_grad_norms = nn.utils.clip_grad_norm_([best_actions], max_norm=self.action_grad_norm, norm_type=2)

actions_optim.step()

best_actions.requires_grad_(False)

best_actions.clamp_(-1., 1.)

best_actions = best_actions.detach()

self.diffusion_memory.replace(idxs, best_actions.cpu().numpy())

return states, best_actions

def train(self, iterations, batch_size=256, log_writer=None):

for _ in range(iterations):

# Sample replay buffer / batch

states, actions, rewards, next_states, masks = self.memory.sample(batch_size)

""" Q Training """

current_q1, current_q2 = self.critic(states, actions)

next_actions = self.actor_target(next_states, eval=False)

target_q1, target_q2 = self.critic_target(next_states, next_actions)

target_q = torch.min(target_q1, target_q2)

target_q = (rewards + masks * target_q).detach()

critic_loss = F.mse_loss(current_q1, target_q) + F.mse_loss(current_q2, target_q)

self.critic_optimizer.zero_grad()

critic_loss.backward()

if self.ac_grad_norm > 0:

critic_grad_norms = nn.utils.clip_grad_norm_(self.critic.parameters(), max_norm=self.ac_grad_norm, norm_type=2)

self.critic_optimizer.step()

""" Policy Training """

# 按照Q梯度上升的方向更新缓冲区的动作

states, best_actions = self.action_gradient(batch_size, log_writer)

# 按照数据注入的方式更新策略,Diffusion Model依旧是监督式的学习方式

actor_loss = self.actor.loss(best_actions, states)

self.actor_optimizer.zero_grad()

actor_loss.backward()

if self.ac_grad_norm > 0:

actor_grad_norms = nn.utils.clip_grad_norm_(self.actor.parameters(), max_norm=self.ac_grad_norm, norm_type=2)

self.actor_optimizer.step()

""" Step Target network """

for param, target_param in zip(self.critic.parameters(), self.critic_target.parameters()):

target_param.data.copy_(self.tau * param.data + (1 - self.tau) * target_param.data)

if self.step % self.update_actor_target_every == 0:

for param, target_param in zip(self.actor.parameters(), self.actor_target.parameters()):

target_param.data.copy_(self.tau * param.data + (1 - self.tau) * target_param.data)

self.step += 1

点评:算法思想确实挺简单的,从论文汇报的效果也还行,但是训练时长大概是SAC的4倍,属实很慢,个人觉得Diffusion Model作为policy用在online RL属实是不适配,尽管适合建模多峰复杂分布,但是采样action的速度实在是太慢了。